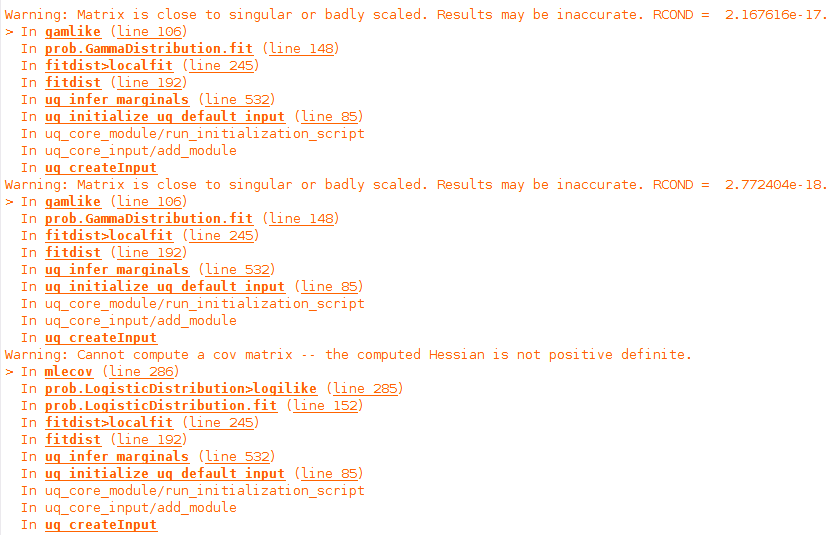

My question would be: How many samples per variable are needed in order to accurately infer their distributions? Is there any rule of thumb of such sort? When choosing a large number of samples I often seem to stumble upon cases of badly conditioned matrices:

Warning: Cannot compute a cov matrix -- the computed Hessian is not positive definite.

Hi @ChrisP

There is no simple answer to your question

This depends on the “true” (and unknown) distribution the variable follows and what you are trying to do with the inferred distribution. If the variable follows a Gaussian the number of points required will be quite small but if your variable exhibits complex tail behavior and you are trying to model this behavior, the number of points can be huge.

A straightforward approach to address this problem would be to incrementally increase the number of supplied points and see if the inferred distributions change significantly. For this, you can have a look at the goodness of fit measures described in the statistical inference manual.

Regarding the error you mention, could you possibly provide a minimum working example that results in this error? I’d be happy to have a look.

If I understand correctly, the warnings shown below mean that my sample has a really bad fit on certain distributions. If that is true, then there isn’t really a problem as long as at least one of the distributions fits the data adequately

Hi @ChrisP

This is indeed possible, but without a minimum working example, it is hard to give a definitive answer

Dear @ChrisP

Thanks for your question.

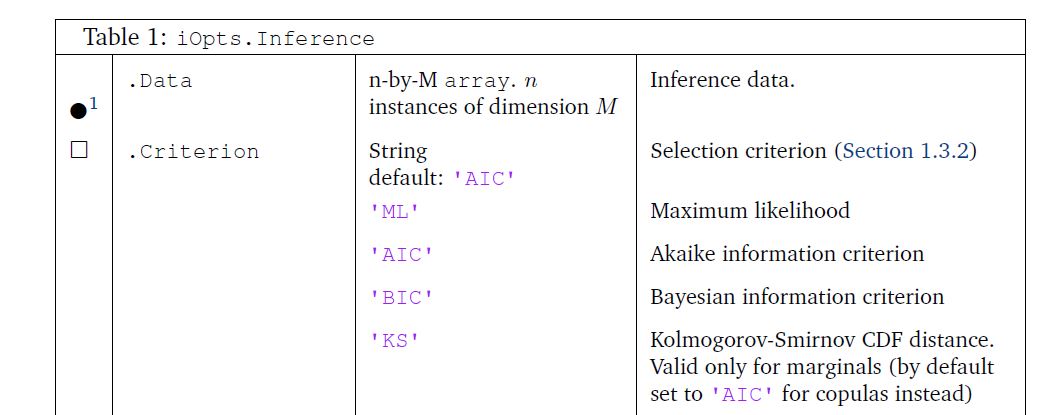

Would you mind please try all of the possible criteria for marginal and copula inference which mentioned in the UserManual_Inference in the Table1 and the Table9 (for the copula inference)?

Marginal Inference:

If you have feedback on these features, I would love to hear it.

Best regards

Ali

Hi @ChrisP,

UQLab uses Matlab’s fitdist function to fit the marginal distributions. It sometimes issues these warnings if it has trouble fitting the distribution to the data (it seems mostly related to the confidence intervals which fitdist computes in addition to the marginal parameters).

As long as the final inferred marginal types are not the types that produced these warnings, you should be fine. If the inferred types did produce warnings, you should check the fit e.g. by plotting the inferred marginal against a histogram of your data – I did some small tests and it seemed that often the fit was still looking fine (so it’s not even necessarily the case that your sample “has a really bad fit” on these distributions).

We’ll add a note on this to the next edition of the inference user manual. Thank you for pointing this out.

It’s actually interesting that you observe these warnings for large numbers of samples, but not for small numbers (if I understand your description correctly). In principle, the more samples the better! We did not investigate why exactly these matrices become singular or not positive definite for large sample sizes. If anyone has insights on this, feel free to share!