Dear Paul,

Thanks for your answer, with its detailed discussion, and for your suggestion to reparametrize the problem. I will apply this to my real-world application.

In view of your remark that the AIES MCMC sampler has fewer problems with the hyperbolic trench than other MCMC samplers, I would like to point out an observation that somewhat surprised me:

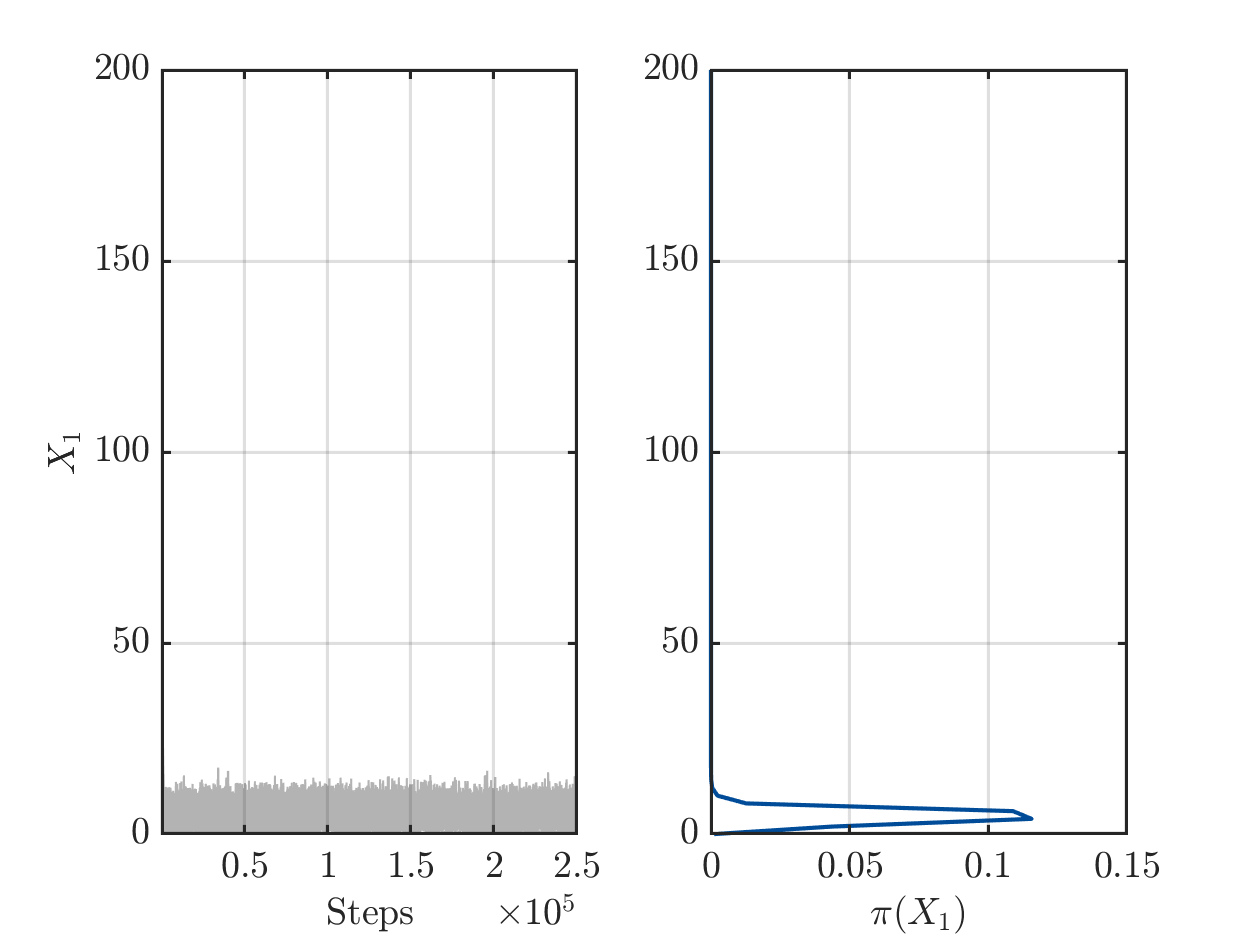

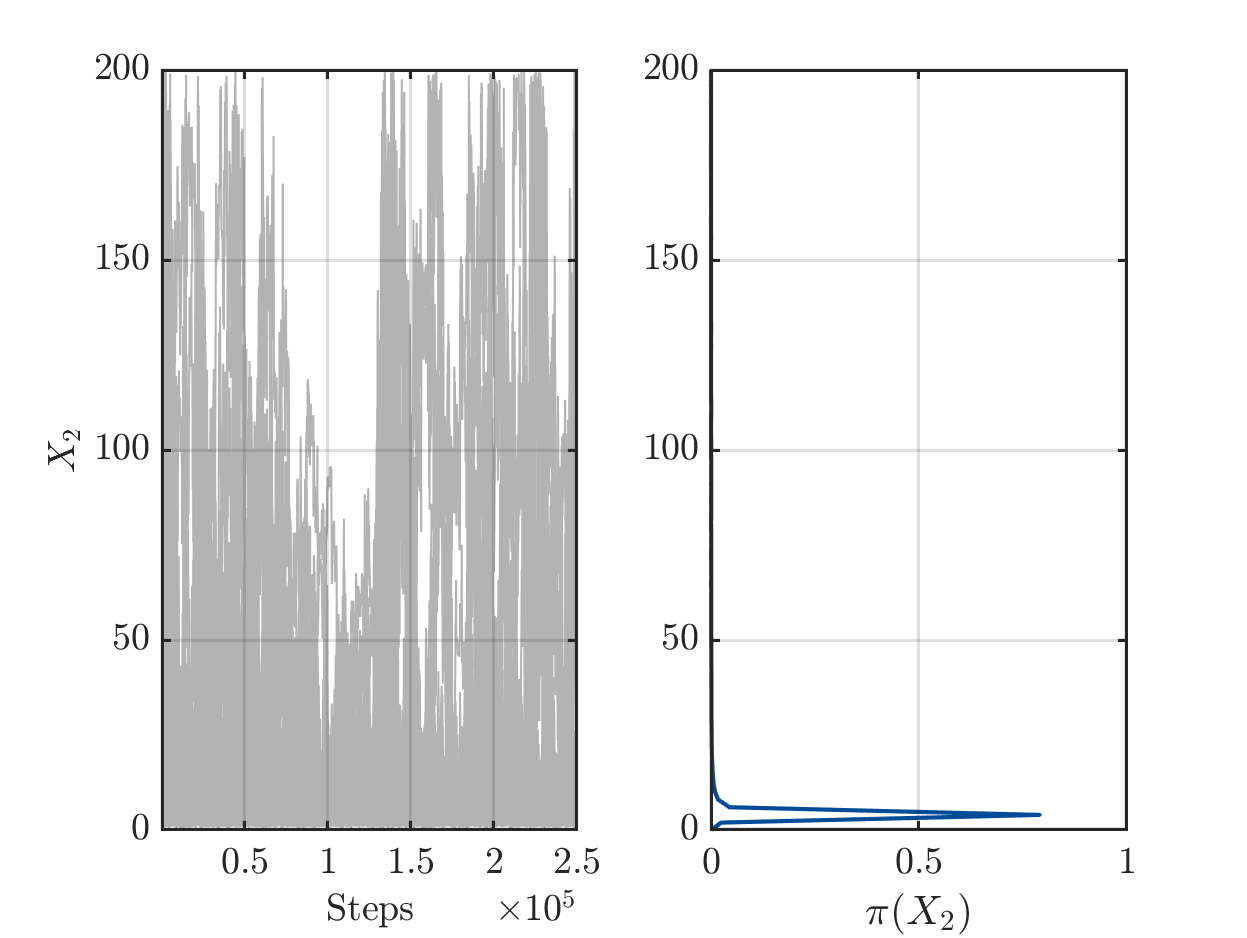

Since I believed that the model output at the mean of the parameter samples would lie in the discrete posterior predictive support once the number of steps became sufficiently large, I increased the value of Solver.MCMC.Steps to 25,000, but could not observe this behavior. It was still not observed after I increased Solver.MCMC.Steps even further to 250,000. After more than 72 hours of computing time, I got the following plots for the MCMC samples, with a strange observation in the second plot, i.e. the one for X2:

I am trying to figure out whether this change in the behavior of the samples for X2 at around step 110,000 can be a genuine result of the algorithm, or whether it indicates a well-hidden error somewhere.
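To make the question more concrete, here is a minimal sketch of how such a jump could be quantified. It is written in Python rather than in my actual setup, it assumes the X2 samples of a single chain have been exported as a plain list, and `segment_shift` is a hypothetical helper name of my own, not a function of the software. The data are synthetic stand-ins, not my real samples. Since MCMC samples are autocorrelated, the number is only a descriptive measure and not a formal test.

```python
import math
import random
import statistics


def segment_shift(trace, split):
    """Difference of the segment means after and before `split`,
    in units of the average within-segment standard deviation."""
    before, after = trace[:split], trace[split:]
    spread = math.sqrt(
        (statistics.pvariance(before) + statistics.pvariance(after)) / 2
    )
    return (statistics.fmean(after) - statistics.fmean(before)) / spread


# Synthetic stand-in data, NOT my real X2 samples.
random.seed(0)
stationary = [random.gauss(0.0, 1.0) for _ in range(4000)]
# Same trace, but with the second half moved up by three units.
shifted = stationary[:2000] + [x + 3.0 for x in stationary[2000:]]

shift_stationary = segment_shift(stationary, 2000)
shift_shifted = segment_shift(shifted, 2000)
print(shift_stationary, shift_shifted)
```

For a trace without a level change the value should stay close to zero, whereas a value of several standard deviations would confirm that the samples before and after the split point sit at clearly different levels. For my case the split would be placed at around step 110,000.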

What do you think?

Many thanks again

Greetings

Olaf