Hi everyone! I am working on a classic simply supported beam problem.

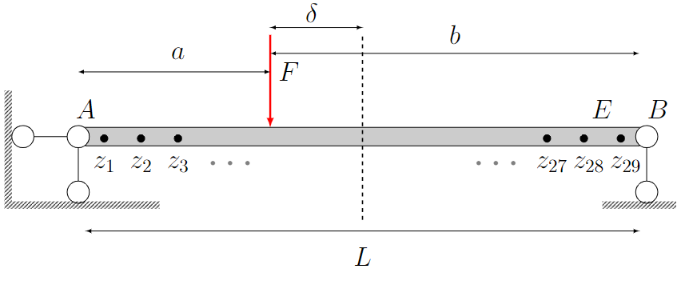

The input variables are the elastic modulus E and the loading position \delta. I need to build a PCE surrogate model and then perform Bayesian inference based on 29 monitored data points along the beam.
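
For reference, here is a minimal Python sketch of the kind of forward model the surrogate replaces (my actual code is in UQLab/MATLAB). It uses the textbook deflection formula for a simply supported beam under a single point load. The load magnitude, span, second moment of area and the equally spaced sensor layout are placeholder assumptions for illustration, not the values in my model.

```python
import numpy as np

# Assumed values for illustration only; they are NOT taken from my actual model.
P_LOAD = 1.0e3    # point load [N]
SPAN = 10.0       # beam length [m]
INERTIA = 1.0e-4  # second moment of area [m^4]


def beam_deflection(E, delta, x):
    """Deflection at positions x for a point load applied at distance delta
    from the left support of a simply supported beam."""
    x = np.asarray(x, dtype=float)
    b = SPAN - delta   # distance from the load to the right support
    xr = SPAN - x      # positions measured from the right support
    left = P_LOAD * b * x * (SPAN**2 - b**2 - x**2)             # valid for x <= delta
    right = P_LOAD * delta * xr * (SPAN**2 - delta**2 - xr**2)  # valid for x > delta
    return np.where(x <= delta, left, right) / (6.0 * E * INERTIA * SPAN)


# 29 equally spaced interior points (assumed sensor layout)
x_obs = np.linspace(0.0, SPAN, 31)[1:-1]

# One model run: a vector of 29 deflections for a single (E, delta) pair
w = beam_deflection(E=2.0e11, delta=4.0, x=x_obs)
print(w.shape)
```

Each model run maps one (E, \delta) pair to a vector of 29 deflections, which is why the surrogate has 29 output components.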

This is my procedure:

Step 1: Create the PCE, following the UQLab example "PCE - Calculation strategies".

Step 2: Run the Bayesian inversion to infer E and \delta, following the UQLab example "Bayesian inversion - MAP estimation".

The code runs and converges well for this simple beam problem, but I have a question about the results I get.

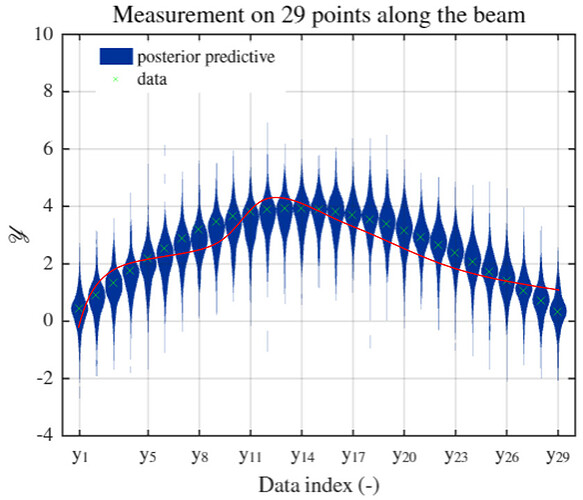

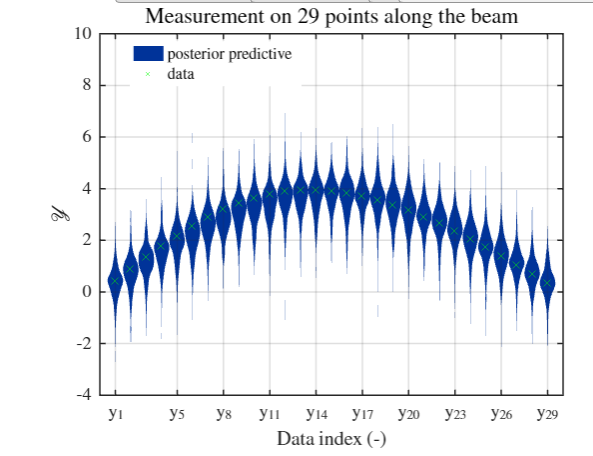

(Please ignore the wide range of the predictive distribution. It should be very small; I made it very large on purpose so that the effect is obvious.)

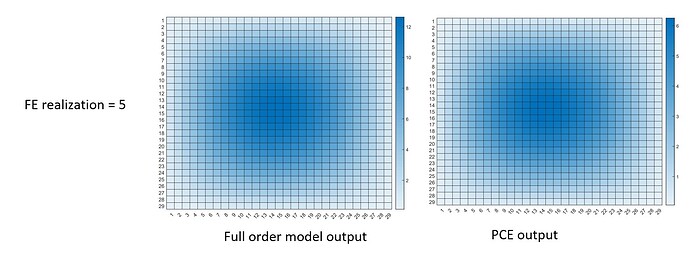

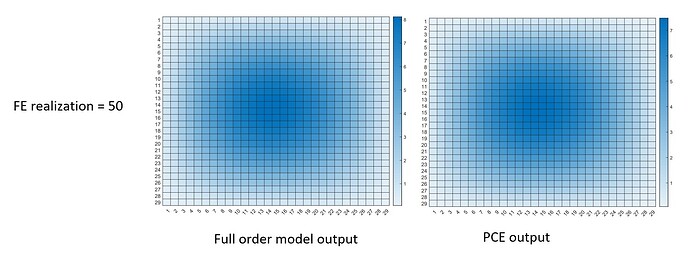

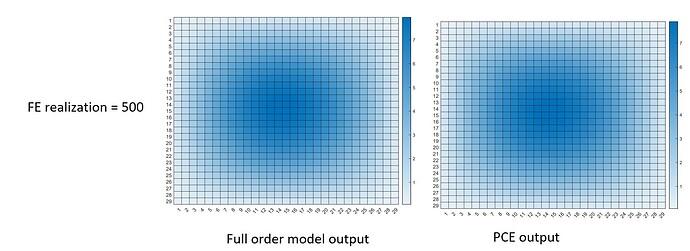

In the PCE I create from the 29 output points along the beam, I get 29 independent sub-PCE models inside the one PCE model, right? I ask because the UQLab Polynomial chaos expansions (PCE) user manual says that “UQLAB performs an independent PCE for each output component on the shared experimental design”.
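
To make clear what I mean by "independent sub-PCEs on a shared experimental design", here is a minimal Python sketch. It is not UQLab code and not how UQLab computes its coefficients: it uses plain least squares on a total-degree Legendre basis, and the beam constants, input ranges, sample size and degree are all assumptions for illustration. Each of the 29 output columns gets its own coefficient vector, but every expansion is evaluated at the same (E, \delta) input.

```python
import numpy as np
from numpy.polynomial.legendre import legvander

# Assumed beam constants and input ranges (illustrative, not my real values)
P_LOAD, SPAN, INERTIA = 1.0e3, 10.0, 1.0e-4
E_RANGE = (1.8e11, 2.2e11)   # elastic modulus [Pa]
D_RANGE = (4.0, 6.0)         # loading position [m]
DEGREE = 5                   # total polynomial degree


def beam_deflection(E, delta, x):
    """Simply supported beam, point load at delta; broadcasts over samples."""
    b, xr = SPAN - delta, SPAN - x
    left = P_LOAD * b * x * (SPAN**2 - b**2 - x**2)
    right = P_LOAD * delta * xr * (SPAN**2 - delta**2 - xr**2)
    return np.where(x <= delta, left, right) / (6.0 * E * INERTIA * SPAN)


def to_physical(u):
    """Map inputs from [-1, 1]^2 to (E, delta) column vectors."""
    E = E_RANGE[0] + 0.5 * (u[:, 0] + 1.0) * (E_RANGE[1] - E_RANGE[0])
    d = D_RANGE[0] + 0.5 * (u[:, 1] + 1.0) * (D_RANGE[1] - D_RANGE[0])
    return E[:, None], d[:, None]


def basis(u, degree):
    """Total-degree tensor Legendre basis for u of shape (N, 2)."""
    v1 = legvander(u[:, 0], degree)
    v2 = legvander(u[:, 1], degree)
    cols = [v1[:, i] * v2[:, j]
            for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.column_stack(cols)


rng = np.random.default_rng(0)
x_obs = np.linspace(0.0, SPAN, 31)[1:-1]               # 29 output points

# Shared experimental design: one set of inputs, 29 outputs per run
u_train = rng.uniform(-1.0, 1.0, size=(200, 2))
Y_train = beam_deflection(*to_physical(u_train), x_obs)  # shape (200, 29)

# lstsq with a matrix right-hand side solves each output column separately,
# i.e. one independent regression per output on the same design
coeffs, *_ = np.linalg.lstsq(basis(u_train, DEGREE), Y_train, rcond=None)

# Evaluate all 29 expansions at ONE new input and compare with the model
u_new = np.array([[0.3, -0.2]])
y_surrogate = (basis(u_new, DEGREE) @ coeffs)[0]
y_model = beam_deflection(*to_physical(u_new), x_obs)[0]
rel_err = np.max(np.abs(y_surrogate - y_model)) / np.max(np.abs(y_model))
print(coeffs.shape, rel_err)
```

In this sketch the 29 fits share no coefficients, yet for any single input they all approximate the same deflection curve, so the surrogate output follows the beam shape to within the approximation error.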

So my question is: since the 29 sub-PCEs (one for each point along the beam, although combined in one PCE structure) are independent, how can we obtain a reasonable posterior predictive distribution? For example, the red line in the picture is valid in probabilistic terms but physically unrealistic. Put another way, can we account for the relationship between the 29 sub-PCEs, or how else can we avoid this unrealistic predictive outcome?

I personally think the problem comes from the fact that every PCE model is independent, so they have no connection to each other. Can someone help me with this question? Thanks in advance!
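
One check I can think of is to compare how rough the predictive curves are with and without the observation noise. The Python sketch below is only an illustration under assumptions: I assume a posterior predictive draw is the model output at a posterior sample plus independent Gaussian noise at each of the 29 points (I have not verified that this is how UQLab builds it), and the beam constants, the posterior samples and the noise level are all made up.

```python
import numpy as np

# Assumed beam constants (illustrative, not my real values)
P_LOAD, SPAN, INERTIA = 1.0e3, 10.0, 1.0e-4


def beam_deflection(E, delta, x):
    """Simply supported beam, point load at delta; broadcasts over samples."""
    b, xr = SPAN - delta, SPAN - x
    left = P_LOAD * b * x * (SPAN**2 - b**2 - x**2)
    right = P_LOAD * delta * xr * (SPAN**2 - delta**2 - xr**2)
    return np.where(x <= delta, left, right) / (6.0 * E * INERTIA * SPAN)


def roughness(curves):
    """Mean over samples of the summed squared second differences along the beam."""
    return np.mean(np.sum(np.diff(curves, n=2, axis=1) ** 2, axis=1))


rng = np.random.default_rng(1)
x_obs = np.linspace(0.0, SPAN, 31)[1:-1]   # 29 points (assumed layout)

# Hypothetical posterior samples of (E, delta); made up for this sketch
E_post = rng.normal(2.0e11, 2.0e9, size=(500, 1))
d_post = rng.normal(5.0, 0.05, size=(500, 1))

# Noise-free predictions: one deflection curve per posterior sample
curves = beam_deflection(E_post, d_post, x_obs)     # shape (500, 29)

# Predictive draws under the ASSUMED independent noise at each point
SIGMA = 1.0e-4   # deliberately large noise standard deviation [m]
noisy = curves + rng.normal(0.0, SIGMA, size=curves.shape)

print(roughness(curves), roughness(noisy))
```

If the jagged line only appears after the noise is added, then at least in this toy setting the roughness comes from the independent noise term and not from the per-output expansions. Whether the same holds for my UQLab results is exactly what I am unsure about.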

The code is attached.

PCE_multiple.zip (265.8 KB)